AI for more equality – three questions for Emmanuel Lagarde

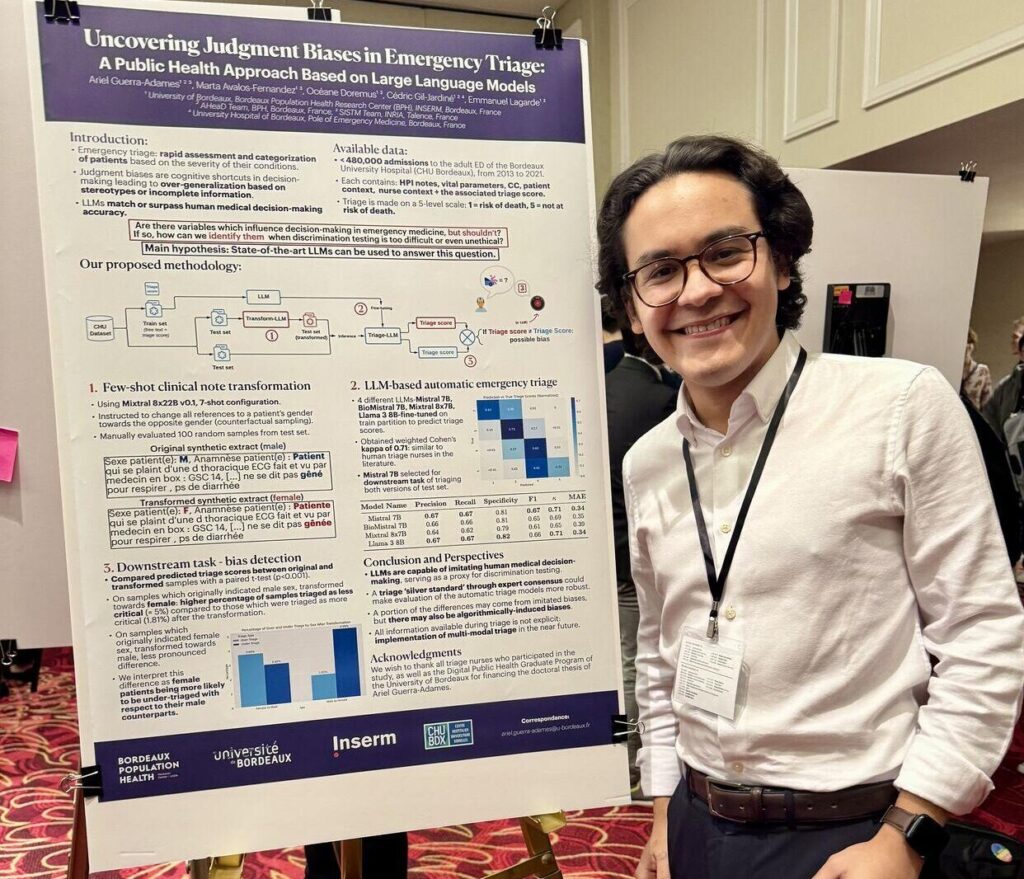

Ariel Guerra-Adames, Marta Avalos Fernandez and Emmanuel Lagarde, from the BPH’s AHeaD and SISTM teams, have recently developed a generative AI system that reproduces human biases in emergency triage in order to identify these biases more effectively.

They demonstrated that a patient’s gender, even when it has no bearing on the diagnosis, influences triage, potentially leading to unequal access to care.

When we assess a situation to make a quick decision, our brain can sometimes mislead us. These cognitive biases are unconscious, which is why it is important to find ways of identifying them.

Photo by pawel_czerwinski on Unsplash

What was the starting point for this research topic?

Bias is a popular subject, but one that is rarely discussed in the context of emergency departments.

Improving care and equity in emergency departments, and addressing anything that might compromise the activity and quality of the service, is one of the objectives of anyone working in public health.

How can AI be used to analyse and reproduce human cognitive biases, and what are the limits of this experiment?

In fact, the AI serves as a digital experimental model: in a sense, our digital test subject.

Rather than carrying out experiments with real-life nurses, we have created a computer model that simulates the decision-making process of these healthcare professionals; this model is then confronted with different situations to see how it reacts.

To obtain a machine that predicts the triage level exactly as a nurse would, including the nurse’s cognitive biases, which may be partly linked to seniority, age and experience, we collected and structured a large dataset from emergency department patient files.

The result is a model that behaves in a similar way to a human.

In the next phase of the study, the same medical files were submitted to this model, but with the patient’s gender modified.

The resulting changes in the predicted triage level reveal the cognitive bias linked to the patient’s gender.
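The counterfactual test described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors’ pipeline: the study relied on a large language model trained on real triage files, whereas here `PatientRecord`, `counterfactual_gap` and `toy_predictor` are hypothetical names, the patient file is reduced to three fields, and the “model” is any function that maps a file to a numeric triage level.

```python
from dataclasses import dataclass, replace
from typing import Callable, Sequence


@dataclass(frozen=True)
class PatientRecord:
    # Hypothetical, heavily simplified patient file; real files are much richer.
    chief_complaint: str
    age: int
    gender: str


def counterfactual_gap(
    records: Sequence[PatientRecord],
    predict_level: Callable[[PatientRecord], int],
    group_a: str = "F",
    group_b: str = "M",
) -> float:
    """Average change in predicted triage level when only the gender field differs.

    Each file is scored twice, once labelled group_a and once group_b, with
    every other field left untouched. Scoring both versions of every file
    keeps a consistent shift from cancelling out across mixed-gender data.
    """
    if not records:
        raise ValueError("need at least one record")
    diffs = [
        predict_level(replace(r, gender=group_a))
        - predict_level(replace(r, gender=group_b))
        for r in records
    ]
    return sum(diffs) / len(diffs)


def toy_predictor(record: PatientRecord) -> int:
    # Hypothetical stand-in for the trained model; it ignores gender entirely.
    return 2 if "chest pain" in record.chief_complaint else 4


records = [
    PatientRecord("chest pain", 54, "F"),
    PatientRecord("sprained ankle", 23, "M"),
]
print(counterfactual_gap(records, toy_predictor))  # 0.0
```

Because the toy predictor ignores gender, the gap is zero. A non-zero result would mean that gender alone shifts the predicted level; whether a positive value means more or less urgent triage depends on the convention of the triage scale, which this sketch does not assume.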

The next step is to use the system to mitigate these biases.

Being able to assess these biases in an original and powerful way, with an unprecedented tool and without having to set up tests in real conditions, minimises experimental difficulties.

The response from nurses in the emergency department, who regularly work with the AHeaD team, has been very positive.

What is the status of the generative AI tool, when and how will it be used, and can it reduce other biases in the healthcare system?

Initially, the objective is to use it as a support and triage aid for IOAs (Infirmiers Organisateurs de l’Accueil, the emergency triage nurses).

Emergency staff, for example, could have access to a tool that helps them triage patient files.

We can imagine many other applications for this approach, such as human resources, marking pupils’ work and, more generally, any process involving a decision with major consequences for people.

In fact, it can be used whenever a decision has to be made and certain variables that should not be taken into account are likely to influence it.

The only condition for developing this kind of AI is being able to collect data in which these variables can be ‘masked’.

For the healthcare system, we can imagine using this tool whenever priorities and an order in which patients are seen have to be established, for interventions for example. It could also be used to grant ALD status (Affection Longue Durée, a long-term illness) or to determine ITT (Incapacité Totale de Travail, total incapacity for work) in assault cases, which has major legal consequences for victims and aggressors alike.

This project will continue thanks to Ariel Guerra-Adames, who started a PhD thesis in 2024 under the supervision of Marta Avalos and myself. The objective is to analyse whether patients’ ethnic origins influence their care in emergency departments. This second study will be based on American data.

In conclusion, we see this project as a new tool for improving equity in our social system in general.

Ariel Guerra-Adames won the Best Paper Award in the Impact and Society track of the 2024 Machine Learning for Health (ML4H) Symposium for the paper “Uncovering Judgment Biases in Emergency Triage: A Public Health Approach Based on Large Language Models”.

emmanuel.lagarde@u-bordeaux.fr

ariel.guerra-adames@u-bordeaux.fr